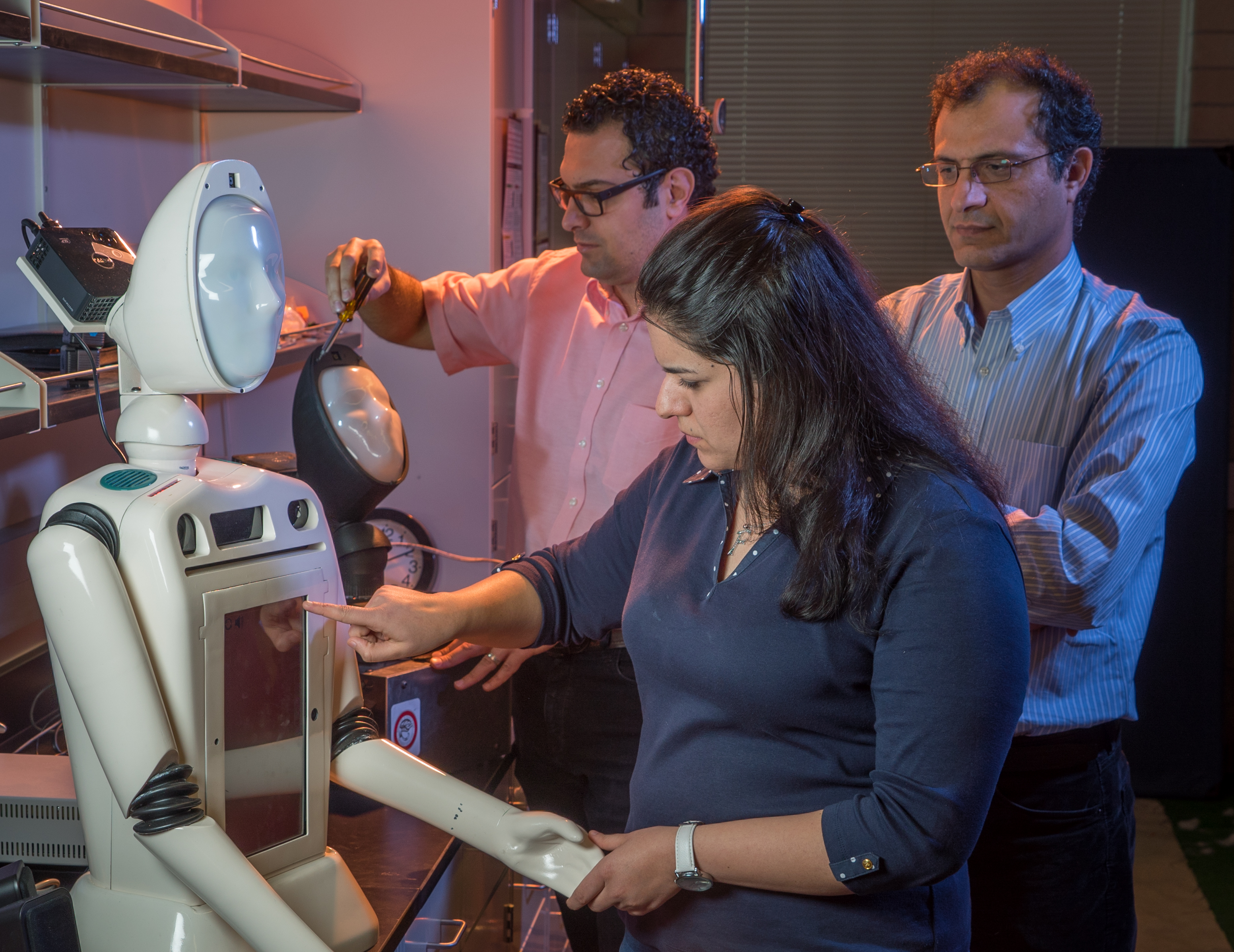

This project develops SocioBot-SDS (SocioBot-Spoken Dialog System), an instrument in the form of an emotionally responsive robotic character, and is expected to advance research on next-generation human-machine interaction. The robotic instrument will be used for therapeutic and educational purposes, and its development will contribute specifically to research on perceived speech and visual behaviors. The instrument's components combine a programmable, robust display of human-like and non-human-like emotive gestures with conventional orientation control through an articulated neck. The work is expected to accelerate research and development of social robots that can accurately model the dynamics of face-to-face communication with a sensitive and effective human tutor, clinician, or caregiver, to a degree unachievable with current instrumentation. The robotic agent builds on advances in computer vision, spoken dialogue systems, character animation, and affective computing to conduct dialogues that establish rapport with users, producing rich, emotive facial gestures synchronized with prosodic speech generation in response to users' speech and emotions. The instrument represents a new level of integration of emotive capabilities, enabling researchers to study socially emotive robots and agents that can understand spoken language, show emotions, and communicate effectively with people.

Related Publications:

- A. Mollahosseini, G. Graitzer, E. Borts, S. Conyers, R. M. Voyles, R. Cole, M.H. Mahoor, “An Emotive Lifelike Robotic Face for Natural Face-to-Face Communication”, 2014 IEEE-RAS International Conference on Humanoid Robots.

- Ali Mollahosseini, David Chan, Mohammad H. Mahoor, “Going Deeper in Facial Expression Recognition using Deep Neural Networks”, IEEE Winter Conference on Applications of Computer Vision (WACV), 2016. http://arxiv.org/abs/1511.04110